What is Docker?

Docker is an open-source platform that enables developers to automate the deployment and management of applications using containerization. Docker packages an application together with the dependencies, libraries, and system tools it needs into a container: a lightweight, isolated environment in which the application runs.

Because each container carries its dependencies with it, an application runs the same way across different environments, such as a developer's laptop, a test server, and production. This consistency makes software easier to develop, test, and deploy.

Here are some key concepts related to Docker:

Containers: Containers are isolated environments that encapsulate an application and its dependencies. They provide a consistent and reproducible runtime environment, making it easier to deploy and run applications across different systems.

Images: Docker images are read-only templates from which containers are created. An image is usually built from a Dockerfile, a set of instructions that specifies the base image, application code, dependencies, and configuration.

Docker Engine: Docker Engine is the runtime environment that executes and manages containers. It consists of a long-running server process (the dockerd daemon), a REST API through which clients talk to the daemon, and a command-line interface (the docker CLI), which is a client of that API. The Docker Engine runs on the host machine and manages the lifecycle of containers.

Dockerfile: A Dockerfile is a text file that contains a set of instructions for building a Docker image. It specifies the base image, copies files into the image, sets environment variables, and defines the command to run when a container starts.

Docker Registry: A Docker Registry is a centralized repository for storing and sharing Docker images. The default public registry is Docker Hub, where you can find a vast collection of pre-built images. You can also set up private registries for internal use.

Docker Compose: Docker Compose is a tool that allows you to define and manage multi-container applications. With Compose, you can specify the configuration for multiple services, their dependencies, and network settings in a single YAML file.
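To see how a Dockerfile, an image, and a Compose file fit together, here is a minimal sketch in shell. The scaffold_project function, the nginx:alpine base image, the file names, the 8080 host port, and the example password are illustrative assumptions, not part of Docker itself. The function only writes files, so it does not need Docker to run.

```shell
# Hypothetical helper: writes a tiny project with a Dockerfile and a Compose file.
# All names, tags, ports, and the password below are illustrative assumptions.
scaffold_project() {
  dir="$1"
  mkdir -p "$dir"

  # Static page that the web image will serve
  printf '<h1>Hello from a container</h1>\n' > "$dir/index.html"

  # Dockerfile: describes how to build the web image
  cat > "$dir/Dockerfile" <<'EOF'
# Base image
FROM nginx:alpine
# Environment variable set inside the container
ENV APP_ENV=production
# Copy application files into the image
COPY index.html /usr/share/nginx/html/index.html
# Port the server listens on (documentation only; publishing happens at run time)
EXPOSE 80
# Command run when a container starts (same as the nginx image's default)
CMD ["nginx", "-g", "daemon off;"]
EOF

  # Compose file: runs the web image and a MySQL container together
  cat > "$dir/compose.yaml" <<'EOF'
services:
  web:
    build: .            # build the image from the Dockerfile in this directory
    ports:
      - "8080:80"       # host port 8080 -> container port 80
    depends_on:
      - db              # start db before web
  db:
    image: mysql:latest # pulled from Docker Hub
    environment:
      MYSQL_ROOT_PASSWORD: example
EOF
}
```

For example, after scaffold_project hello-site, running docker build -t hello-site . inside hello-site builds only the image, while docker compose up -d builds it and starts both services. This assumes the Compose plugin is installed; the older standalone tool is invoked as docker-compose up -d.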

Container vs. Virtual Machine

- Container:

A container is a lightweight, standalone, and executable software package that includes an application and all its dependencies.

Containers provide a way to isolate applications from the underlying system and from other containers while sharing the host operating system (OS) kernel.

Multiple containers can run on a single host machine, sharing the same OS kernel but with separate file systems and network interfaces.

Containers are portable, fast to start and stop, and have lower resource overhead compared to VMs.

- Virtual Machine (VM):

A virtual machine is a software emulation of a complete computer system, including the hardware components, an operating system, and applications.

VMs run on a physical host machine and use a hypervisor to manage and allocate the hardware resources. Each VM operates independently, with its own dedicated OS, file system, and network interfaces.

VMs allow running multiple operating systems and applications on a single physical host machine. VMs are isolated from each other and from the host system, providing strong isolation and security.

However, VMs typically have higher resource overhead compared to containers, as they require separate OS instances and consume more memory, disk space, and CPU.
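The shared-kernel point can be checked directly. This is a minimal sketch that assumes Docker is installed on a Linux host and that the small alpine image can be pulled from Docker Hub; compare_kernels is a hypothetical helper name. On Docker Desktop for macOS or Windows, containers run inside a Linux VM, so the two versions will differ there.

```shell
# Hypothetical helper: compares the host's kernel version with the one a container sees.
# Assumes Docker is installed and the alpine image can be pulled.
compare_kernels() {
  host_kernel=$(uname -r)
  # Run uname inside a throwaway container (--rm deletes it when it exits)
  container_kernel=$(docker run --rm alpine uname -r)
  echo "host:      $host_kernel"
  echo "container: $container_kernel"
  if [ "$host_kernel" = "$container_kernel" ]; then
    echo "same kernel"
  else
    echo "different kernel"
  fi
}
```

On a Linux host, calling compare_kernels should report the same version twice, because the container never boots a kernel of its own, whereas every VM does.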

Use Cases of Containers and Virtual Machines

The choice between containers and virtual machines (VMs) depends on your specific requirements and the context in which you're working. Each technology has its strengths and is better suited for certain use cases:

- Containers are generally the better fit for:

Resource Efficiency: Containers have lower resource overhead compared to VMs. They share the host operating system kernel, which reduces memory and CPU usage.

Scalability and Agility: Containers have faster startup times, enabling rapid deployment and scaling. They are designed to be lightweight and portable, making it easy to spin up multiple instances quickly.

Application Portability: Containers provide excellent portability. They encapsulate applications and their dependencies into a single package, making it easier to package, distribute, and deploy applications consistently across different environments.

Development and Testing: Containers streamline the development and testing processes. Developers can package their applications and dependencies into containers, ensuring consistent development and testing environments.

- Virtual Machines are generally the better fit for:

Stronger Isolation: VMs provide stronger isolation than containers. Each VM runs its own operating system, providing complete separation between applications. This isolation is advantageous in scenarios where strict security boundaries or running different operating systems is required.

Legacy Applications: VMs are well-suited for running legacy applications that are not easily containerized. These applications might have complex dependencies or specific hardware requirements that are better supported by VMs.

Different Operating Systems: VMs allow running multiple operating systems on a single host machine, which can be useful for scenarios where compatibility with specific operating systems or running different OS versions is necessary.

Stability and Reliability: Each VM runs its own kernel on a virtualized hardware layer, so a crash or misconfiguration inside one VM's operating system does not affect the other VMs on the same host. Containers, by contrast, all depend on the host's shared kernel.

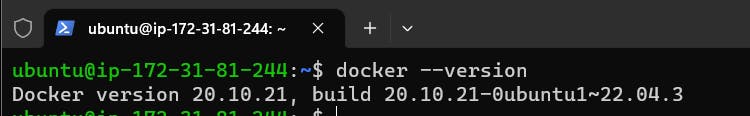

Installation of Docker

To install Docker on Ubuntu or another Debian-based system, run the following commands:

```shell
sudo apt-get update
sudo apt-get install docker.io -y
# To check the version of installed Docker
docker --version
```
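Note that docker --version only prints the client's version and does not contact the Docker daemon. A minimal sketch of a fuller check, using the hypothetical helper name docker_ready, calls docker info, which fails if the service is stopped or the current user cannot access the Docker socket. Until the current user is added to the docker group, expect it to report the daemon as unreachable.

```shell
# Hypothetical helper: checks that the docker CLI exists and the daemon answers.
docker_ready() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker CLI not found"
    return 1
  fi
  # docker info talks to the daemon, so it fails if the service is stopped
  # or the current user lacks permission on the Docker socket
  if docker info >/dev/null 2>&1; then
    echo "docker daemon reachable"
  else
    echo "docker CLI found, but the daemon is not reachable"
    return 1
  fi
}
```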

Add the current user to the docker group so that you don't need sudo every time you run a docker command.

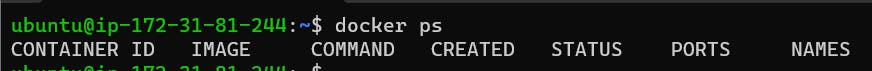

```shell
sudo usermod -aG docker $USER
# Log out and back in (or reboot) for the group change to take effect
sudo reboot
```

To list the running containers:

```shell
docker ps
```

To stop a container

```shell
docker stop <container_id>
```

To remove a container:

```shell
docker rm <container_id>
```

On most Linux distributions, the Docker service is managed with the systemctl command:

```shell
# Start the Docker service
sudo systemctl start docker
# Stop the Docker service
sudo systemctl stop docker
# Restart the Docker service
sudo systemctl restart docker
# Check the status of the Docker service
sudo systemctl status docker
# Show the unit's properties, including environment variables defined in the systemd unit file
sudo systemctl show docker
```
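systemctl start only starts the service for the current boot. A minimal sketch, with the hypothetical helper name ensure_docker_service, starts Docker if it is not already active and enables it so it also starts at boot. It assumes a systemd-based distribution where the service is named docker, as in the commands above.

```shell
# Hypothetical helper: make sure the Docker service runs now and after a reboot.
ensure_docker_service() {
  # is-active --quiet prints nothing and only sets the exit status (0 = active)
  if systemctl is-active --quiet docker; then
    echo "docker service already running"
  else
    sudo systemctl start docker
    echo "docker service started"
  fi
  # enable registers the service to start automatically at boot
  sudo systemctl enable docker
}
```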

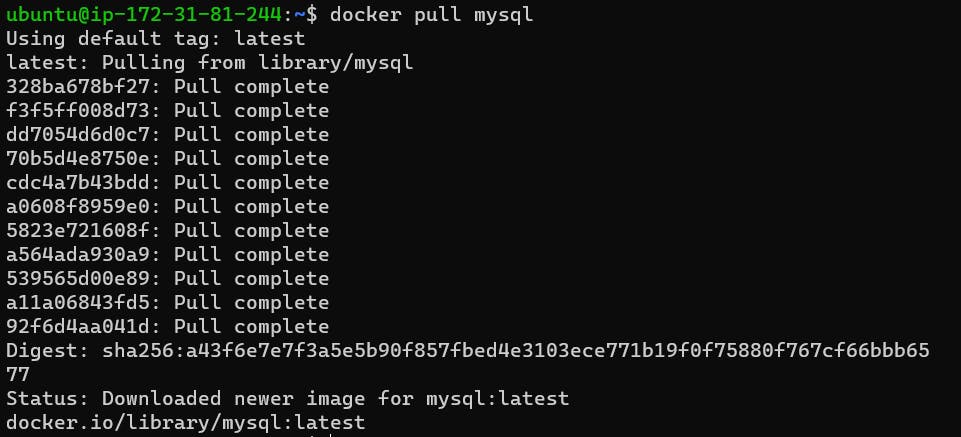

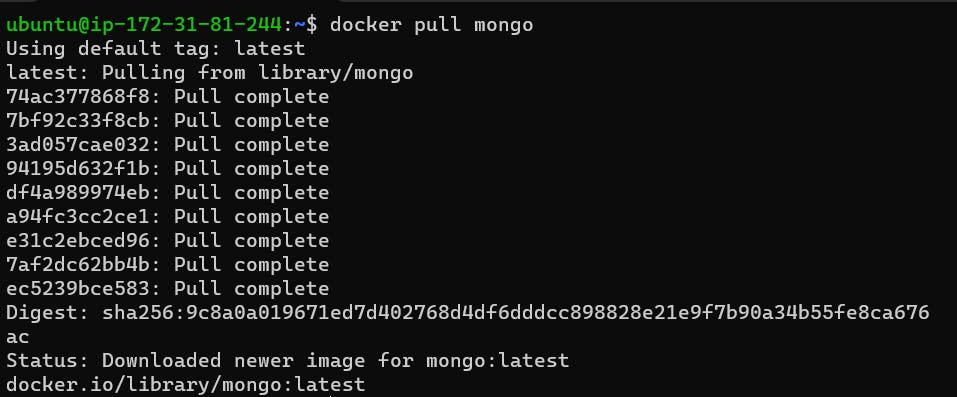

Installation of MySQL DB

To pull the latest MySQL image from Docker Hub:

```shell
docker pull mysql
```

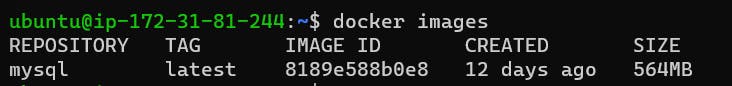

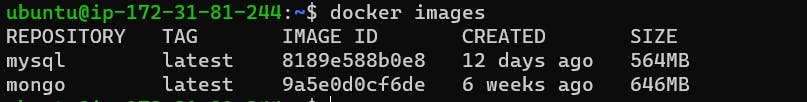

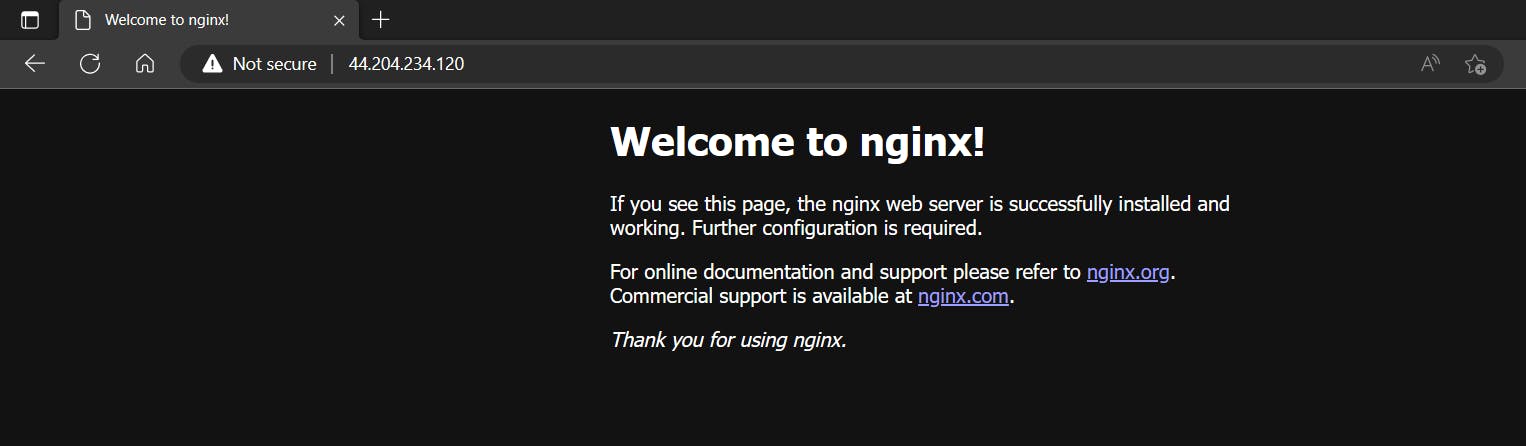

To list the images you have pulled:

```shell
docker images
```

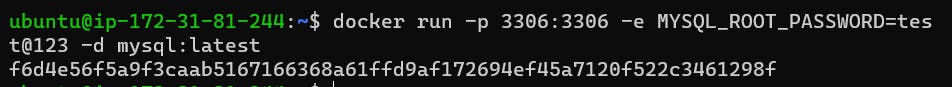

To create a container from the MySQL image, publish its port and set the root password:

```shell
docker run -p 3306:3306 -e MYSQL_ROOT_PASSWORD=<PASSWORD> -d mysql:latest
# -p 3306:3306 -> map host port 3306 to container port 3306 (MySQL's default port)
# -e -> set an environment variable inside the container
# MYSQL_ROOT_PASSWORD -> password for the MySQL root user
# -d -> detached mode: the container runs in the background and its logs are not printed
# mysql:latest -> image_name:tag (the tag identifies the version)
```

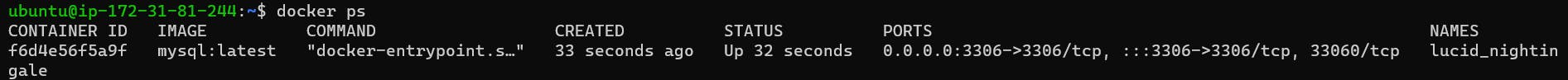
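Two additions make the MySQL container easier to manage: a fixed --name, so later commands can use the name instead of a container ID, and a named volume mounted at /var/lib/mysql (where the official image stores its data), so the databases survive docker rm. A minimal sketch; the start_mysql helper and the &lt;name&gt;-data volume naming are assumptions for illustration.

```shell
# Hypothetical helper: start MySQL with a fixed name and a named data volume.
# Usage: start_mysql <name> <root_password>
start_mysql() {
  name="$1"; password="$2"
  docker run -d \
    --name "$name" \
    -p 3306:3306 \
    -e MYSQL_ROOT_PASSWORD="$password" \
    -v "$name-data":/var/lib/mysql \
    mysql:latest
}
```

For example, after start_mysql mysql-db '&lt;PASSWORD&gt;', running docker stop mysql-db and docker rm mysql-db removes the container but keeps the mysql-db-data volume, so a new container started the same way sees the same data.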

To list the running containers:

```shell
docker ps
```

To open an interactive bash shell inside the running container:

```shell
docker exec -it <container_id> bash
```

Once the bash shell starts, log in to the MySQL server with the root password:

```shell
mysql -u root -p
# root is the default administrative user
# Enter the password when prompted to log in
```

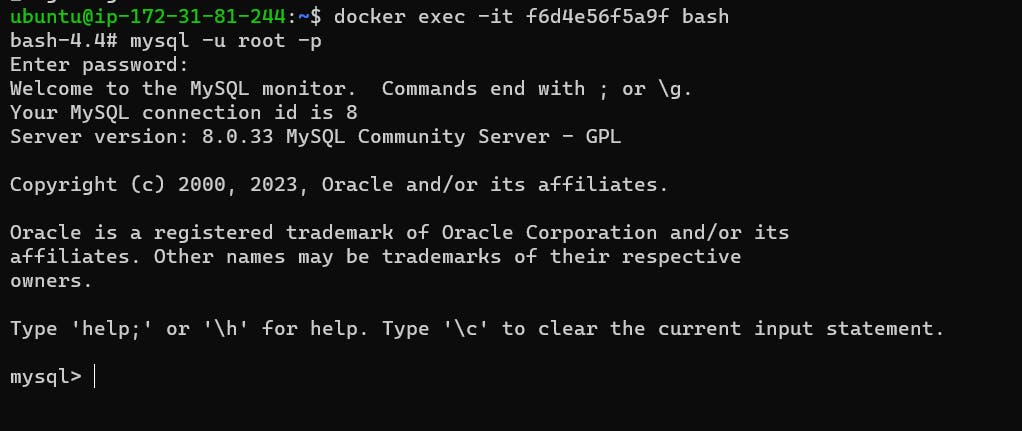
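For a one-off query you don't need an interactive session: docker exec can run the mysql client directly, and its -e option executes a single statement and exits. A minimal sketch with a hypothetical mysql_query helper. Passing the password on the command line is convenient for experiments, but the mysql client prints a warning that doing so is insecure.

```shell
# Hypothetical helper: run one SQL statement inside a MySQL container.
# Usage: mysql_query <container> <root_password> <sql>
mysql_query() {
  container="$1"; password="$2"; sql="$3"
  # No -it: nothing is typed interactively, so no terminal is needed
  docker exec "$container" mysql -u root -p"$password" -e "$sql"
}
```

For example: mysql_query &lt;container_id&gt; '&lt;PASSWORD&gt;' 'SELECT VERSION();'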

Installation of Mongo DB

To pull the latest MongoDB image from Docker Hub:

```shell
docker pull mongo
```

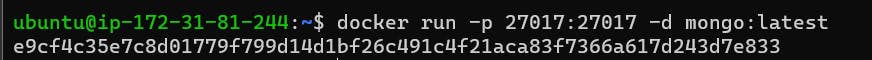

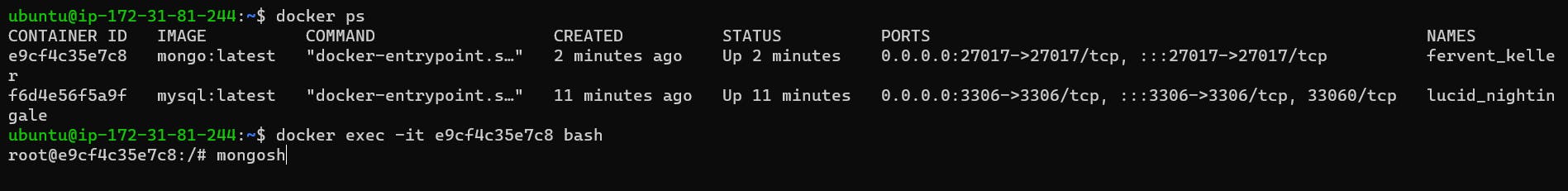

Start a new container with docker run, publishing MongoDB's default port 27017 in detached mode:

```shell
docker run -p 27017:27017 -d mongo:latest
```

Open an interactive bash shell inside the container:

```shell
docker exec -it <container_id> bash
```

To connect to MongoDB, use the mongosh shell:

```shell
mongosh
# At the test> prompt, type exit to leave the MongoDB shell
```

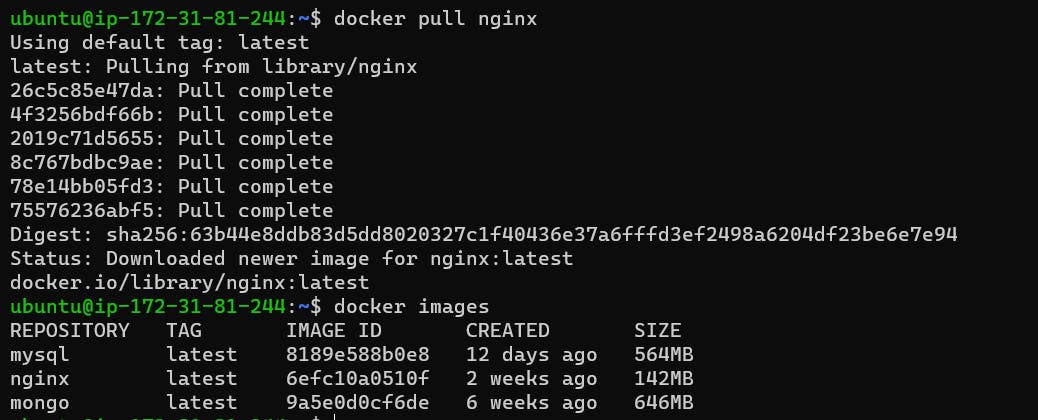

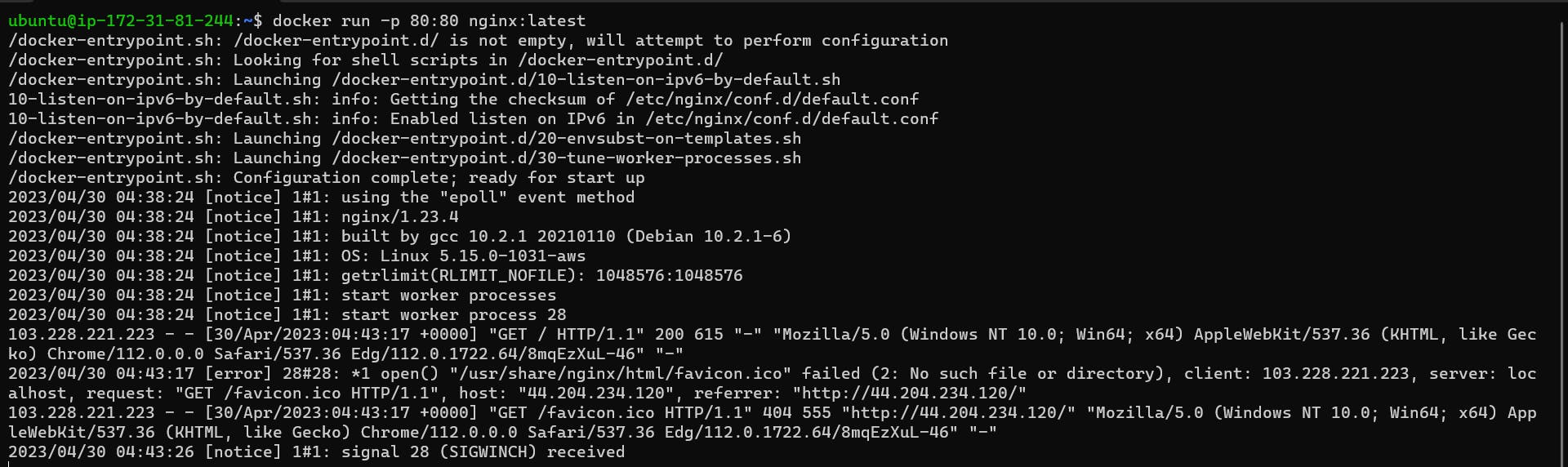

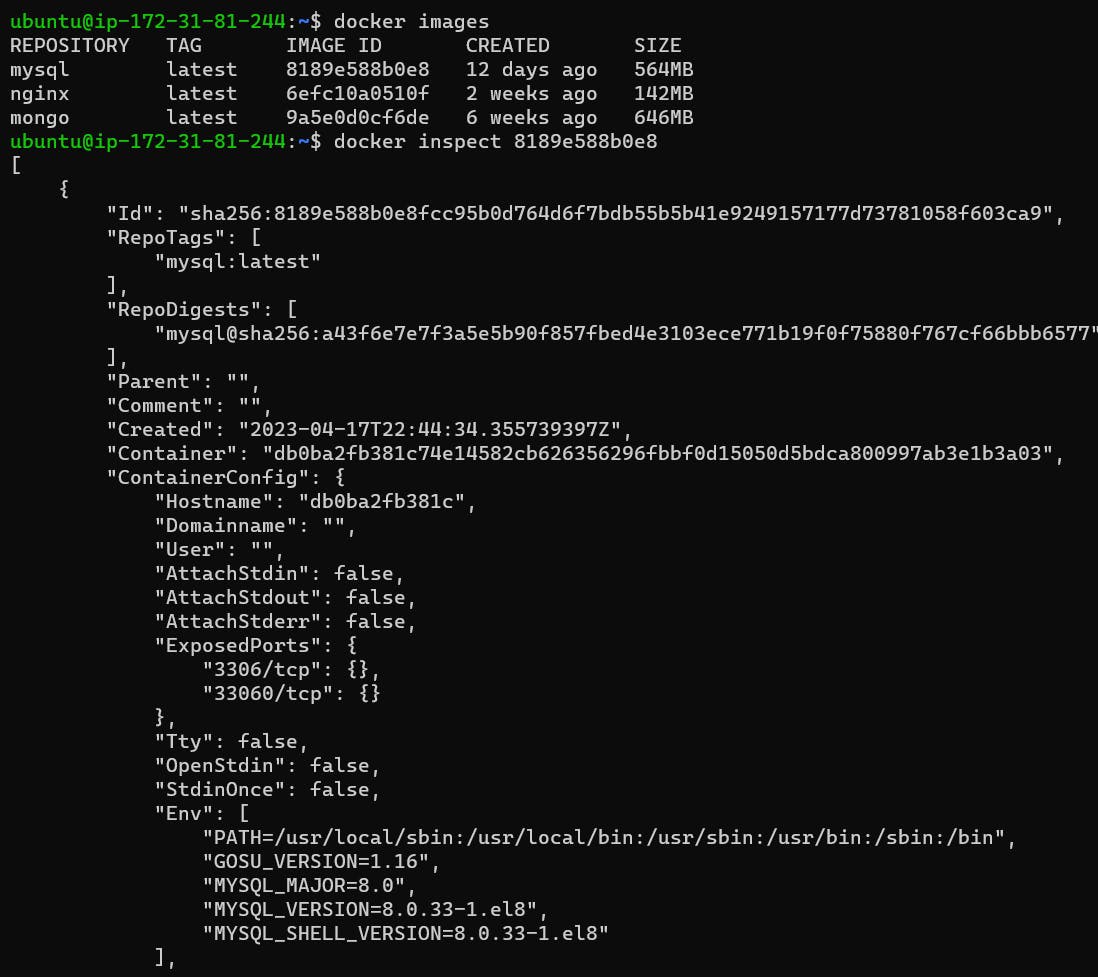
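Similarly, mongosh accepts --eval to run a single command and exit, which is handy in scripts. A minimal sketch with a hypothetical mongo_eval helper; --quiet skips the startup messages so only the command's result is printed.

```shell
# Hypothetical helper: run one mongosh command inside a MongoDB container.
# Usage: mongo_eval <container> <javascript>
mongo_eval() {
  container="$1"; js="$2"
  # --eval runs the JavaScript and exits; --quiet suppresses startup messages
  docker exec "$container" mongosh --quiet --eval "$js"
}
```

For example: mongo_eval &lt;container_id&gt; 'db.runCommand({ ping: 1 })'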

Installation of NGINX

To pull the latest NGINX image from Docker Hub:

```shell
docker pull nginx
```

To create a container from NGINX image

```shell
docker run -p 80:80 nginx:latest
```

Copy the host's public IPv4 address and open it in a new browser tab to see NGINX running.
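Started without -d, NGINX runs in the foreground and holds the terminal; adding -d runs it in the background instead. To check from the host that the server answers, a minimal sketch polls the URL with curl until it returns HTTP 200. It assumes curl is installed; the wait_for_http name, the http://localhost:80 default, and the 10-attempt limit are illustrative choices.

```shell
# Hypothetical helper: poll a URL until it returns HTTP 200 or attempts run out.
# Usage: wait_for_http [url] [attempts]
wait_for_http() {
  url="${1:-http://localhost:80}"
  attempts="${2:-10}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -s hides progress, -o discards the body, -w prints only the status code
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url")
    if [ "$code" = "200" ]; then
      echo "up after $i attempt(s)"
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "no HTTP 200 from $url"
  return 1
}
```

For example, run docker run -d -p 80:80 nginx:latest and then wait_for_http.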

Task

Use the docker inspect command to view detailed information about a container or image.

```shell
# Get the Docker image ID
docker images
docker inspect <image_id>
```

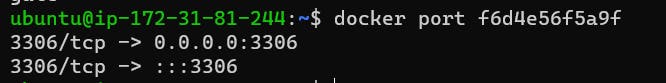
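docker inspect prints a large JSON document. Its --format option takes a Go template, which lets you pull out individual fields instead. A minimal sketch with a hypothetical image_summary helper that prints an image's OS/architecture and its exposed ports (.Os, .Architecture, and .Config.ExposedPorts are fields of the image's inspect output).

```shell
# Hypothetical helper: print a few fields of an image instead of the full JSON.
# Usage: image_summary <image>
image_summary() {
  image="$1"
  docker inspect --format 'OS/arch: {{.Os}}/{{.Architecture}}' "$image"
  # json renders the ports map as compact JSON
  docker inspect --format 'Exposed ports: {{json .Config.ExposedPorts}}' "$image"
}
```

For example: image_summary nginx:latest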

Use the docker port command to list the port mappings for a container.

```shell
docker port <container_id>
```

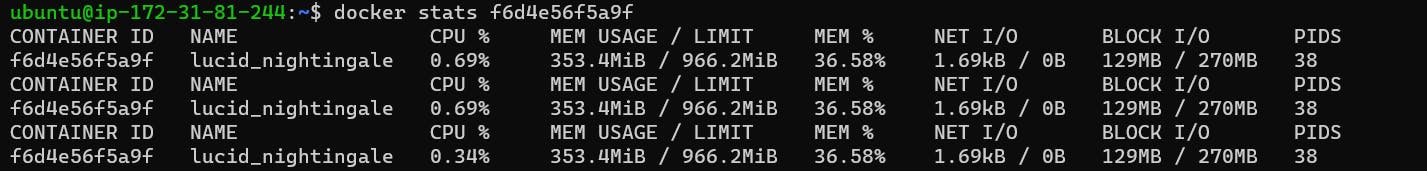

Use the docker stats command to view resource usage statistics for one or more containers.

```shell
docker stats <container_id>
```

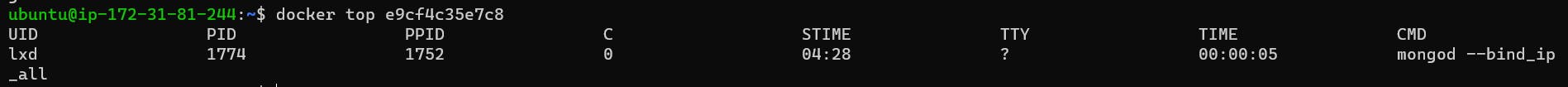
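By default docker stats keeps refreshing until you press Ctrl+C. With --no-stream it prints a single sample and exits, and --format selects the columns, which suits scripts and logs. A minimal sketch; stats_snapshot is a hypothetical helper name, and with no arguments it reports all running containers.

```shell
# Hypothetical helper: one snapshot of CPU and memory usage.
# Usage: stats_snapshot [container ...]
stats_snapshot() {
  # --no-stream prints one sample and exits instead of refreshing continuously
  docker stats --no-stream \
    --format 'table {{.Name}}\t{{.CPUPerc}}\t{{.MemUsage}}' "$@"
}
```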

Use the docker top command to view the processes running inside a container.

```shell
docker top <container_id>
```

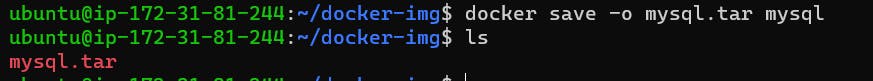

Use the docker save command to save an image to a tar archive.

```shell
docker save -o <file_name.tar> <image_name>
```

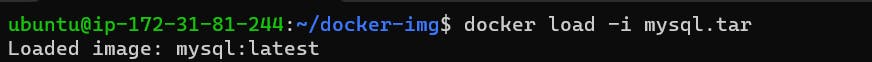

Use the docker load command to load an image from a tar archive.

```shell
docker load -i <file_name.tar>
```
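Together, docker save and docker load let you move an image to a machine without registry access. When -o is omitted, docker save writes the archive to standard output, so it can be compressed on the fly, and docker load accepts gzip-compressed archives directly. A minimal sketch; export_image and import_image are hypothetical helper names.

```shell
# Hypothetical helpers: move an image between machines as a compressed archive.
# Usage: export_image <image> <archive.tar.gz>
export_image() {
  image="$1"; archive="$2"
  # Without -o, docker save streams the tar archive to stdout
  docker save "$image" | gzip > "$archive"
}

# Usage: import_image <archive.tar.gz>
import_image() {
  # docker load accepts gzip-compressed archives directly
  docker load -i "$1"
}
```

For example, run export_image nginx:latest nginx.tar.gz on one machine, copy the file to the other (for instance with scp), and run import_image nginx.tar.gz there.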

Thank You,

I want to express my deepest gratitude to each and every one of you who has taken the time to read, engage with, and support my journey as an aspiring DevOps Engineer.

Feel free to reach out to me if the blog needs any corrections or additions. Your feedback is always welcome and appreciated.

~ Abhisek Moharana 😊